Some notes for myself… Just points to remember, I skip the overview and note the details that makes me grasp the concepts, usually why questions. Notice they are mostly my understanding from the lectures, and not intended to be a reference; I just type my understanding for future reference for myself.

What is ergodicity in Markov Chain?

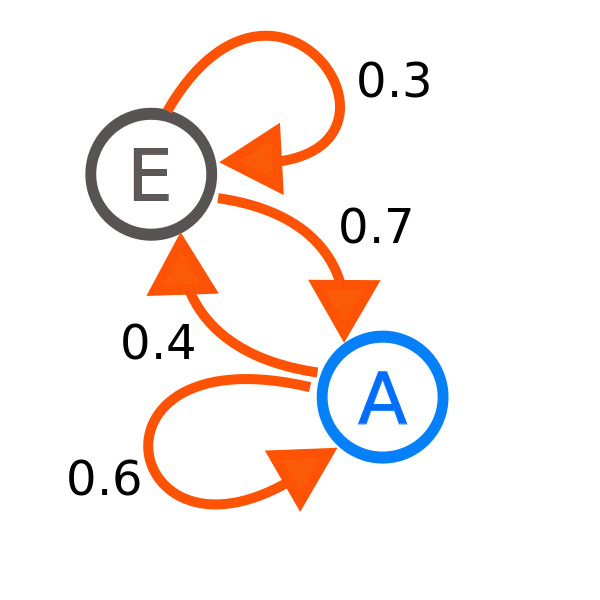

It’s the property which describes we can reach to every state in precisely t steps. Precisely means we need to ensure independent of the starting state, we can reach to any state in exactly t steps, which is finite. Therefore, for example if we can reach a state with only even number of time steps for some initial state, but not for odd number of time steps, we say it is not ergodic. For example this one is not ergodic:

What is a stationary distribution?

The distribution of the states after N number of steps in a Markov Chain where N goes to infinity. It’s like the steady state in circuit theory, but for the probability to be in a specific state. This is nothing but the solution to equation P*x=x where x represents the Markov Chain steps. If all probabilities are greater than 1 in x, we see this is independent of the initial state.

Detailed balance equation: pi(x)*P(x’|x)=pi(x’)*P(x|x’) where pi is the stationary distribution and P(x’|x) is the probability to go from state x to state x’.

Metropolis, Hastings theorem: The stationary distribution is 1/Z*Q(x). The proof is straightforward actually; since detailed balance equation exists, we now that we can design a Markov Chain with 1/Z*Q(x). The stationary distribution is unique and independent of the starting position.

What is Metropolis, Hastings theorem?

It is the idea to approximate P(x) = 1/Z*H(x) distribution with a Markov Chain. We have 3 distributions:

1. P(x): the actual distribution we’d like to approximate

2. Q(x): the approximation to P(x)

3. G(x): the transition distribution, probability notation for the Markov Chain

The idea is to guess G(x) which approximates the P(x) in the stationary distribution. The results are checked using Q(x) and the state updates use this approximation for state changes.

More clearly, the algorithm is,

What is acceptance rate in Metropolis, Hastings theorem?

Acceptance rate is actually the rate which helps us simply accept or reject the state transition. If the rate is equal to or greater than 1, we simply do the transition whereas if it is smaller than 1, we do the state change with that probability, e.g. P(state changed) = alpha.

What is Gibbs Sampling?

I think this video is pretty clear explaining it.

It is basically sampling from conditional distribution consecutively for all random variables one by one. The trick is joint distribution is difficult to sample, but the conditional ones are easy to sample.

Basically:

Conditions:

1. Sampling P(x,y) is difficult

2. Sampling P(x|y) and P(y|x) is easy

Algorithm:

cond_dist1 = Gaussian(some_mu, some_covariance)

cond_dist2 = Gaussian(some_mu, some_covariance)

N = 10 # N is some number to sample

x = [0] * N

y = [0] * N

for counter in range(N-1):

x[counter+1] = cond_dist1.sample(y=y[counter])

# I think there are two variants here

# we can either use the sampled value

# from above line or the default ones

# I use the latter one here

y[counter+1] = cond_dist2.sample(x=x[counter])

# This is our distribution!

plot(x, y)References:

[1] Wikipedia

[2] Metropolis-Hastings YouTube

[3] Gibbs Sampling Youtube

Leave a Reply